Notice: FY2024 is coming in Feb 2024.

Highlights

US Treasury Breach: 400+ PCs hit.

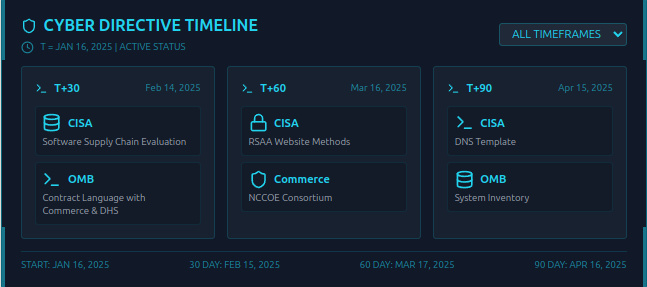

Biden: 40-page order on AI, quantum & security.

Nvidia: NeMo Guardrails for agentic AI.

NIST: Updated AI guidelines.

Microsoft: Red teaming AI insights.

Special: Joint security guideline for tech owners and operators.

Deep Dive

1. US Treasury Breach: Tom's Hardware

Hackers accessed over 400 PCs.

3,000+ unclassified files were compromised.

The Secretary's device was accessed, along with those of other top officials.

Linked to group Salt Typhoon.

First discovered on December 8th by a third-party vendor.

Mitigation: Enhance endpoint security; MFA is a must.

US Treasury hack, 2024 top settlements, Apple $95M settlement, 15 years of HIPAA, US task force report.

2. Biden Executive Order: Wired, NPR, Forbes, White House

40-page order focuses on digital infrastructure.

Mandates for secure software development.

Digital identity documents, quantum cryptography.

AI to bolster cybersecurity.

Many deadlines for the new administration to meet.

Mitigation: Review and prepare for EO compliance deadlines.

3. Nvidia NeMo Guardrails for AI: VentureBeat

Agentic AI is expected to be trendy in 2025.

Content safety, topic control and jailbreak detection.

Uses Colang policy language.

Monitors AI conversations contextually.

Open-source; commercial support is available.

Mitigation: Evaluate whether NeMo Guardrails fits your AI needs.

4. NIST AI 800-1 Updated Guidelines: NIST

Second draft of misuse risk guidelines.

Best practices for evaluations.

Detailed risk appendices (cyber, chemical, bio).

Marginal risk framework emphasized.

Supports open model development equally.

Notice: Provide public feedback by March 15th.

5. Microsoft AI Red Teaming Insights: Microsoft

100+ gen AI products, 8 lessons.

Simple attacks are effective.

AI red teaming != safety benchmarking.

System-wide perspective is key.

Automation is important; humans are essential.

LLMs amplify existing risks.

Mitigation: Proactively adopt security practices.

6. (Bonus!) Hewlett Packard Enterprise Breach: Hackread

IntelBroker claims breach via DIRECT ATTACK.

Stolen data is for sale on dark net forums.

Stolen data includes source code, certs, PII.

Includes Docker builds, GitHub repos, APIs.

HPE, not HP Inc., is the victim.

Mitigation: Investigate supply chain/vendor management NOW.
