Review: Secure Data for AI

tl;dr: best practices for protecting the data used to train and operate AI systems

A recent joint cybersecurity information sheet (CSI), authored by leading agencies including the NSA, CISA, FBI, and their international counterparts (ASD, ACSC, NCSC), provides guidance on securing the data used to train and operate AI systems.

The CSI is a strategic overview that follows the lifecycle of AI data and the vulnerabilities that can emerge at each stage. It is geared toward organizations that use AI in critical operations, with an emphasis on protecting sensitive, proprietary, or mission-critical data.

Why This Matters: Data Comes First

The document underscores a fundamental truth: AI is only as good as the data it learns from. Compromised data can lead to inaccurate predictions, biased outcomes, and even malicious behavior.

The CSI highlights three significant areas of concern:

Data Supply Chain: Where does your data come from? Is it trustworthy?

Maliciously Modified (“Poisoned”) Data: Can an attacker intentionally corrupt your training data?

Data Drift: Does the data your AI system encounters in the real world change over time, degrading its performance?

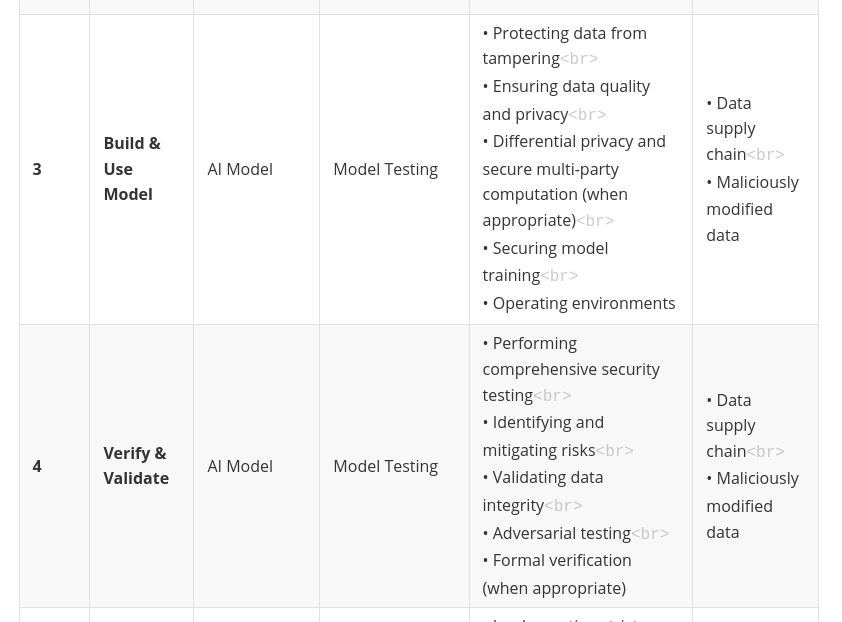

AI System Lifecycle Stages

Key Points

Data security is critical throughout all stages of the AI lifecycle.

Three major data security risks are consistently present: data supply chain vulnerabilities, maliciously modified data, and data drift.

Verification & Validation is required each time new data or user feedback is introduced.

Continuous monitoring in the final stage helps adapt to evolving threats.

The AI System Lifecycle: A Framework for Security

The CSI structures its guidance around the AI system lifecycle, aligning with the NIST AI Risk Management Framework (RMF). This is a smart move, providing a clear and actionable framework for organizations to implement security measures. Here’s a breakdown of the key considerations at each stage:

Plan & Design: Security needs to be baked in from the beginning. This includes threat modeling, privacy-by-design principles, and robust data governance policies.

Collect & Process: Data provenance is critical. You need to know where your data came from, how it was collected, and whether it has been tampered with.

Build & Use: This is where the model is trained. Data sanitization, anomaly detection, and secure training pipelines are essential.

Verify & Validate: Rigorous testing is needed to identify vulnerabilities and ensure the model performs as expected.

Deploy & Use: Strict access controls, secure storage, and monitoring for anomalous behavior are crucial.

Operate & Monitor: Continuous monitoring for data drift and ongoing risk assessments are essential to maintain security over time.
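To make "continuous monitoring for data drift" concrete, here's a minimal sketch of one common drift statistic, the Population Stability Index (PSI), which compares how a feature's values are distributed in production against a training-time baseline. The CSI does not prescribe a specific metric; PSI, the 10-bin default, and the sample data below are illustrative assumptions.

```python
import bisect
import math
import random
from typing import Sequence


def quantile_cuts(baseline: Sequence[float], n_bins: int = 10) -> list[float]:
    """Interior cut points that split the baseline into roughly equal-frequency bins."""
    ordered = sorted(baseline)
    return [ordered[len(ordered) * k // n_bins] for k in range(1, n_bins)]


def bin_fractions(values: Sequence[float], cuts: Sequence[float]) -> list[float]:
    """Share of values in each bin; values beyond the outer cuts land in the end bins."""
    counts = [0] * (len(cuts) + 1)
    for v in values:
        counts[bisect.bisect_right(cuts, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline: Sequence[float], current: Sequence[float],
        n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` relative to `baseline` (0 means identical bins)."""
    cuts = quantile_cuts(baseline, n_bins)
    expected = bin_fractions(baseline, cuts)
    actual = bin_fractions(current, cuts)
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) when a bin is empty
        score += (a - e) * math.log(a / e)
    return score


# Illustrative data: a training baseline, a fresh sample from the same
# distribution, and a sample whose mean has shifted by one standard deviation.
rng = random.Random(0)
train = [rng.gauss(0.0, 1.0) for _ in range(5000)]
same = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]
print(f"stable: {psi(train, same):.3f}  shifted: {psi(train, shifted):.3f}")
```

A widely used industry rule of thumb (again, not from the CSI) reads PSI below about 0.1 as stable and above about 0.25 as a significant shift worth investigating or retraining on.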

Best Practices: A Toolkit for AI Data Security

Foundational Practices (Across the Lifecycle):

Data Encryption: Secure data at rest, in transit, and during processing.

Access Control: Implement strict access controls to limit who can view/modify data.

Data Classification: Categorize data based on sensitivity to apply appropriate security measures.
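Classification and access control work together: labels decide how sensitive a dataset is, and access rules decide who may touch it. Here's a minimal sketch of that pairing. The four sensitivity levels, the dataset and principal names, and the rule that writes need an explicit per-dataset grant are all hypothetical. In practice you would express this in your platform's IAM or RBAC system rather than hand-rolled code.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Dataset:
    name: str
    sensitivity: Sensitivity


@dataclass(frozen=True)
class Principal:
    name: str
    clearance: Sensitivity
    can_modify: frozenset[str] = frozenset()  # datasets this principal may write


def can_read(user: Principal, ds: Dataset) -> bool:
    # Read access requires clearance at or above the dataset's classification.
    return user.clearance >= ds.sensitivity


def can_write(user: Principal, ds: Dataset) -> bool:
    # Writing also requires an explicit grant for this dataset (least privilege):
    # high clearance alone is not enough to modify training data.
    return can_read(user, ds) and ds.name in user.can_modify


# Hypothetical example: a confidential training set and three principals.
train_set = Dataset("customer-training-v3", Sensitivity.CONFIDENTIAL)
analyst = Principal("analyst", Sensitivity.INTERNAL)
ml_engineer = Principal("ml-eng", Sensitivity.CONFIDENTIAL, frozenset({"customer-training-v3"}))
auditor = Principal("auditor", Sensitivity.RESTRICTED)

for p in (analyst, ml_engineer, auditor):
    print(p.name, "read:", can_read(p, train_set), "write:", can_write(p, train_set))
```

Separating read from write matters for AI data specifically: anyone who can silently modify a training set is in a position to poison it.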

Data Handling & Integrity:

Data Provenance Tracking: Know where your data comes from & its history.

Digital Signatures: Authenticate data & verify its integrity.

Data Sanitization: Cleanse data to remove errors & malicious inputs.

Anomaly Detection: Identify & remove suspicious data points.

Regular Data Audits: Continuously assess data quality & security.
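Provenance tracking and signing both come down to proving that the bytes you train on are the bytes you meant to collect. Here's a minimal sketch, assuming a hypothetical manifest that records the data source and a SHA-256 hash per file, plus a tag over the whole manifest. One loud caveat: Python's standard library has no public-key signatures, so this sketch uses an HMAC as a stand-in. An HMAC is symmetric, so anyone holding the key can also forge tags. Real deployments should use asymmetric digital signatures (for example Ed25519 from a vetted crypto library) so verifiers never hold the signing key.

```python
import hashlib
import hmac
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(records: dict[str, bytes], source: str) -> dict:
    """Record where the data came from and a content hash for every file."""
    return {
        "source": source,
        "files": {name: sha256_hex(blob) for name, blob in sorted(records.items())},
    }


def sign_manifest(manifest: dict, key: bytes) -> str:
    # Canonical JSON so the same manifest always produces the same bytes.
    # HMAC-SHA256 is a stand-in for a real (asymmetric) digital signature.
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(records: dict[str, bytes], manifest: dict, tag: str, key: bytes) -> list[str]:
    """Return the problems found; an empty list means data and manifest check out."""
    if not hmac.compare_digest(sign_manifest(manifest, key), tag):
        return ["manifest tag invalid: manifest itself was altered"]
    problems = []
    expected = manifest["files"]
    for name in sorted(set(expected) | set(records)):
        if name not in records:
            problems.append(f"missing: {name}")
        elif name not in expected:
            problems.append(f"unexpected: {name}")
        elif sha256_hex(records[name]) != expected[name]:
            problems.append(f"modified: {name}")
    return problems


# Hypothetical key and data; keep real keys in a KMS or HSM, never in code.
KEY = b"demo-key-do-not-use"
data = {"train.csv": b"id,label\n1,cat\n2,dog\n", "val.csv": b"id,label\n3,cat\n"}
manifest = build_manifest(data, source="https://example.com/dataset-v1")
tag = sign_manifest(manifest, KEY)

print(verify(data, manifest, tag, KEY))  # []
tampered = dict(data, **{"train.csv": b"id,label\n1,dog\n2,dog\n"})  # one label flipped
print(verify(tampered, manifest, tag, KEY))  # ['modified: train.csv']
```

Checking the tag before the file hashes matters: otherwise an attacker who can edit both the data and the manifest could simply recompute the hashes.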

Lifecycle-Specific Practices:

Plan & Design: Incorporate security from the start (threat modeling, privacy-by-design).

Collect & Process: Verify data sources & implement secure data transfer.

Build & Use: Secure training pipelines & monitor for data drift.

Deploy & Use: Secure storage, APIs, & monitor for anomalous behavior.

Operate & Monitor: Continuous risk assessments & incident response planning.

Advanced Practices:

Privacy-Preserving Techniques: Use techniques like data masking & differential privacy.

Trusted Infrastructure: Leverage zero-trust architectures & secure enclaves.

Secure Data Deletion: Use secure methods to erase data when no longer needed.

Data Quality Testing: Regularly assess and improve data quality.
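The two privacy-preserving techniques named above can be sketched in a few lines each. Masking hides identifying detail in individual records. Differential privacy instead adds calibrated noise to aggregate answers, here with the Laplace mechanism on a count query: adding or removing one record changes a count by at most 1, so noise with scale 1/epsilon gives epsilon-differential privacy. The email format, epsilon value, and synthetic records are illustrative assumptions. Naive floating-point noise sampling like this has known side-channel weaknesses, so production systems should use a vetted DP library.

```python
import random
from typing import Callable


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records: list[dict], predicate: Callable[[dict], bool],
             epsilon: float, rng: random.Random) -> float:
    """Epsilon-DP count: sensitivity is 1, so the Laplace noise scale is 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


print(mask_email("alice@example.com"))  # a***@example.com

# Synthetic records: ages 20..79, so exactly 15 people are 65 or older.
patients = [{"age": a} for a in range(20, 80)]
noisy = dp_count(patients, lambda r: r["age"] >= 65, epsilon=0.5, rng=random.Random(42))
print(f"noisy count of 65+: {noisy:.1f}")
```

Smaller epsilon means more noise and stronger privacy; each query spends privacy budget, so repeated queries on the same data need to be accounted for.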

Risks: Poisoning, Drift, and Supply Chain Attacks

The document dedicates significant attention to three specific threats:

Data Poisoning: This involves intentionally injecting malicious data into the training set to manipulate the model’s behavior. The CSI highlights the surprisingly low cost at which this can be achieved, making it a realistic threat.

Data Drift: As the real-world data distribution changes, the model’s performance can degrade. The CSI emphasizes the importance of continuous monitoring and retraining to address this issue.

Data Supply Chain Attacks: Compromised or unreliable data sources, whether tampered with by malicious actors or simply outdated or inaccurate, can introduce vulnerabilities into the AI system.

The CSI rightly points out the difficulty in distinguishing between data drift and deliberate poisoning. This ambiguity underscores the need for robust monitoring and incident response capabilities.
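As a first-pass screen for suspicious points in an incoming batch, here's a minimal sketch of a robust outlier check using modified z-scores built on the median and the median absolute deviation (MAD). The 0.6745 constant and 3.5 threshold follow a standard statistical convention; the batch values are made up. This only catches crude outliers: targeted or "clean-label" poisoning is designed to look statistically normal, and a flagged shift may just be drift. Given the ambiguity the CSI describes, flagged points should go to human review rather than be silently deleted.

```python
import math
import statistics
from typing import Sequence


def robust_z_scores(values: Sequence[float]) -> list[float]:
    """Modified z-scores; median and MAD barely move when a few outliers are present."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median; treat any other value as extreme.
        return [0.0 if v == med else math.copysign(math.inf, v - med) for v in values]
    return [0.6745 * (v - med) / mad for v in values]


def flag_suspicious(values: Sequence[float], threshold: float = 3.5) -> list[int]:
    """Indices whose modified z-score exceeds the threshold, to be queued for review."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > threshold]


batch = [10.1, 9.8, 10.3, 9.9, 10.0, 10.2, 55.0, 9.7]  # hypothetical feature values
print(flag_suspicious(batch))  # [6]
```

Median and MAD are used instead of mean and standard deviation because a large injected value inflates the standard deviation enough to hide itself.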

Here's what you should do now:

Read the Document: Familiarize yourself with the full CSI document: https://media.defense.gov/2024/May/20/2003439257/-1/-1/0/CSI-AI-DATA-SECURITY.PDF

Assess Your AI Systems: Identify the AI systems in your organization and map them to the AI lifecycle stages outlined in the CSI.

Conduct a Risk Assessment: Evaluate the potential risks to your AI data at each stage of the lifecycle.

Implement Best Practices: Prioritize and implement the best practices recommended in the CSI.

Stay Informed: Keep up-to-date on the latest AI security threats and mitigation strategies.
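Mapping systems to lifecycle stages and assessing risk can start as a simple register. Here's a minimal sketch that ties each entry to one of the CSI's lifecycle stages and one of its three headline risks, then ranks entries by likelihood times impact. That scoring scheme and its 1 to 5 scales are a common risk-management convention assumed here, not something the CSI prescribes; the system names are hypothetical.

```python
from dataclasses import dataclass

STAGES = ("Plan & Design", "Collect & Process", "Build & Use",
          "Verify & Validate", "Deploy & Use", "Operate & Monitor")
RISKS = ("data supply chain", "poisoned data", "data drift")


@dataclass
class RiskEntry:
    system: str
    stage: str
    risk: str
    likelihood: int  # hypothetical scale: 1 (rare) .. 5 (almost certain)
    impact: int      # hypothetical scale: 1 (minor) .. 5 (severe)

    def __post_init__(self) -> None:
        # Reject entries that don't map onto the lifecycle stages or known risks.
        if self.stage not in STAGES or self.risk not in RISKS:
            raise ValueError(f"unknown stage or risk: {self.stage!r}, {self.risk!r}")
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError("likelihood and impact must be in 1..5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(register: list[RiskEntry]) -> list[RiskEntry]:
    """Highest score first; ties keep their original order (sorting is stable)."""
    return sorted(register, key=lambda e: e.score, reverse=True)


register = [
    RiskEntry("fraud-model", "Collect & Process", "data supply chain", 3, 4),
    RiskEntry("fraud-model", "Operate & Monitor", "data drift", 4, 3),
    RiskEntry("support-chatbot", "Build & Use", "poisoned data", 2, 5),
]
for e in prioritize(register):
    print(f"{e.score:>2}  {e.system:<16} {e.stage:<18} {e.risk}")
```

Validating stage and risk names up front keeps the register consistent with the lifecycle framing, which makes gaps (a stage with no entries at all) easy to spot.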

Securing AI data is an ongoing process, not a one-time fix. By embracing a proactive and holistic approach, organizations can harness the power of AI while mitigating the risks. The future of AI depends on it.
