Pass-by 2FA Gmail and Youtube, America Privacy Right Acts, Apple Spyware, RedTeam on LLMs.

8/4-14/4/2024

Hello, this week I continue to experiment the format of a newsletter. It could be very much longer than it was last time. Please let me know if you have any comments. Feel free to drop me a message via DM or a chatbox below.

Top news

More after my review about “Phishing without login page” last week. “a pattern emerging of people whose accounts have been hacked despite having 2FA activated and being unable to recover their accounts, you know something out of the ordinary is happening…” (Forbes, 14/3/2024) This appears to be a cryptocurrency “give away” scam targeting youtube, gmail and microsoft 365 (Source)

A bipartsian bill draft for a new online data privacy and protection (APRA) is introduced this week, mostly focused on consumers. “Users would also be allowed to opt out of targeted advertising, and have the ability to view, correct, delete, and download their data from online services. The proposal would also create a national registry of data brokers, and force those companies to allow users to opt out of having their data sold.”

Apple sent a notification to 92 countries about a mercenary Spyware attack. “Apple detected that you are being targeted by a mercenary spyware attack that is trying to remotely compromise the iPhone associated with your Apple ID -xxx-,” “This attack is likely targeting you specifically because of who you are or what you do. Although it’s never possible to achieve absolute certainty when detecting such attacks, Apple has high confidence in this warning — please take it seriously,”.

Education

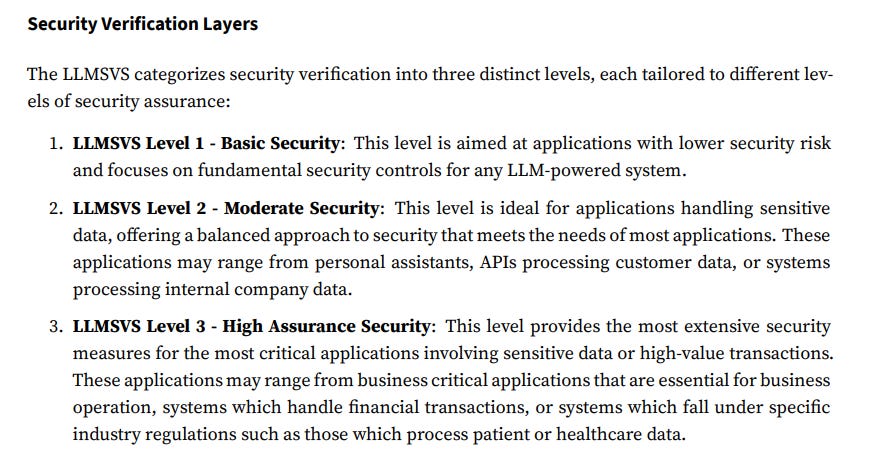

This week I completed a 9 day free course from Lekara.ai copperated with OWASP Top 10 for LLM applications. Plus, you can learn more the first draft of OWASP Large Language Model Security Verification Standard 0.1.

Review: Red team towards LLMs

Last week, chatGPT remove a login page. Any body around the world can try the service with GPT-3.5, soon GPT-4 , GPT-4 turbo, and also Gemini - 1.5 easier than ever. It will lead to more top GenAI concern that fuels human risk. (Source, Source)

Email phishing, spoofing and ransomware.

Deepfake Images to spread disinformation, misinformation, false information.

This week, I shift focused on Red team based on a new brand course on Coursera. This is a brief review with examples and demo.

Red team’s task is to simulate adversaries actions and tactics for testing and improving the effectiveness of an organization’s defenses.

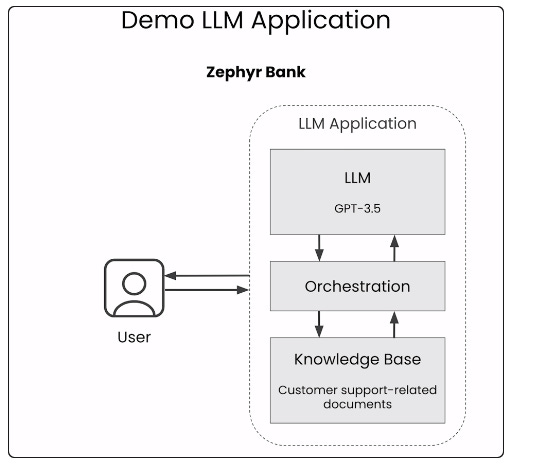

Demo with a bank.

Here they introduces a format to help AI avoid from bypassing or jailbreak AI. Honestly, how to protect gen AI is knowing how to jailbreak gen AI. If they can not jail break - Simply mean it is passed.

Automate a process to tackle prompt injections

Define a technique to inject a prompt.

Library of prompts.

Do a scan.

First, what does it help? Clear some assumption that fine-tune improves model quality but not its toxictiy; benchmark is not safety and security; Foundation model is not LLM app.

Examples:

Shared: Content: toxic and offensive; privacy and data security; criminal and illicit activities; bias and stereotypes;

Unique: inappropriate content; out of scope behavior; hallucinations; sensitive information disclosure; security vulnerabilities.

Tackling LLM application safety by asking “What could go wrong?” to anticipate the worst scenarios. Some material could help are

OWASP top 10

AI incident database

AVID