
This report from OpenAI summarizes the organization's efforts to identify and disrupt various attempts to use its AI models for malicious purposes.

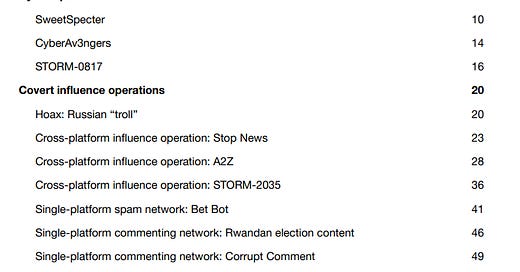

It details a range of operations, categorized by their objectives:

Cyber operations like the spear phishing campaign by SweetSpecter.

Covert influence operations like the Russia-origin Stop News network.

Single-platform spam networks like the Bet Bot operation.

The report also discusses the use of AI in elections and the challenges of detecting and preventing its abuse for harmful ends.

Main Themes:

AI's Role in Malicious Activities: The report focuses on the use of OpenAI's language models (primarily ChatGPT) by various threat actors, including state-sponsored groups and commercial entities, for a range of malicious activities.

Limited Impact of AI on Attack Sophistication: While AI models assist in various tasks, the report asserts that they have not led to "meaningful breakthroughs" in malware creation or audience building for threat actors.

Intermediate Phase Utilization: Threat actors primarily employ AI models in the intermediate phase of their operations, after acquiring basic tools (internet access, social media accounts) but before deploying finished products (malware, social media posts).

Election Interference with Limited Reach: The report analyzes several election-related influence operations utilizing AI, concluding their impact remained limited and did not achieve viral engagement or build sustained audiences.

AI Companies as Targets: OpenAI itself is targeted by hostile actors, as exemplified by the "SweetSpecter" case, highlighting the need for robust security within AI companies.

Most Important Ideas/Facts:

Threat actors utilize AI for tasks such as:

Debugging malware (e.g., STORM-0817)

Generating content for social media (e.g., A2Z, Bet Bot)
